Delivery Details

This page describes the methods available for receiving your data feeds and how the folder that stores the deliverables is organized.

Delivery options

Placer offers several ways to access its POI analytics insights, which are exported as CSV files. Choose one of the following delivery options, subject to availability for each feed type.

Delivery option | Available for feeds |

Migration trends | |

Migration trends | |

Migration trends |

Customer-owned bucket

Amazon Web Services (AWS) S3

For S3 delivery, Placer needs the following:

Share your S3 bucket with Placer

- Create or reuse an S3 bucket for receiving the export files. Provide that path to Placer.

- Create an AWS role (or use an existing one) that has the following permissions on the target delivery bucket:

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "s3:PutObject",
        "s3:GetObject",
        "s3:DeleteObject",
        "s3:ListBucket",
        "s3:GetBucketLocation"
      ],
      "Resource": [
        "arn:aws:s3:::{{bucket goes here}}",
        "arn:aws:s3:::{{bucket goes here}}/*"
      ]
    }
  ]
}

- Provide your Customer Success Manager with the ARN of the AWS role (the Customer Role) that will be used for the delivery. The Customer Role must be granted assume-role permissions to interact with Placer’s Role. To do so, create the following policy in your AWS account:

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "AllowAssumeRoleSid",
      "Effect": "Allow",
      "Action": "sts:AssumeRole",
      "Resource": "arn:aws:iam::<accountId>:role/<roleName>"
    }
  ]
}

- Placer will provide you with the ARN of the Placer Role in return.
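
The two policy documents above can also be generated programmatically, which avoids typos when substituting the placeholders. The following is a minimal sketch, not an official Placer tool: the function names, the example bucket name, and the example account ID and role name are hypothetical, and the values you substitute for `<accountId>` and `<roleName>` should be the ones agreed with your Customer Success Manager.

```python
import json


def bucket_access_policy(bucket: str) -> dict:
    """Build the delivery-bucket permissions policy for the given bucket name."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": [
                    "s3:PutObject",
                    "s3:GetObject",
                    "s3:DeleteObject",
                    "s3:ListBucket",
                    "s3:GetBucketLocation",
                ],
                # The bucket itself (for ListBucket/GetBucketLocation)
                # and every object inside it (for Put/Get/Delete).
                "Resource": [
                    f"arn:aws:s3:::{bucket}",
                    f"arn:aws:s3:::{bucket}/*",
                ],
            }
        ],
    }


def assume_role_policy(account_id: str, role_name: str) -> dict:
    """Build the sts:AssumeRole policy, filling <accountId> and <roleName>."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowAssumeRoleSid",
                "Effect": "Allow",
                "Action": "sts:AssumeRole",
                "Resource": f"arn:aws:iam::{account_id}:role/{role_name}",
            }
        ],
    }


if __name__ == "__main__":
    # "my-placer-exports" is a hypothetical bucket name used for illustration.
    print(json.dumps(bucket_access_policy("my-placer-exports"), indent=2))
```

The printed JSON can be pasted into the AWS console or saved to a file and attached with your usual infrastructure tooling.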

Google Cloud Storage (GCS)

Share your GCS bucket with Placer

- Create a Google Cloud Storage bucket

- Create a new Service Account (or use an existing account) and provide your Placer account manager with the credentials file associated with the account (JSON file)

- Grant the roles/storage.objectUser permission on the bucket to the Service Account

- All done! Once enabled, Placer's data will be uploaded to the desired bucket.

Microsoft Azure sync

Placer currently supports export only to customer-owned Microsoft Azure buckets.

To sync your exports with Microsoft Azure, follow these steps in your Microsoft Azure account:

- Create a dedicated Azure container (or use an existing one)

- Set up a role with the Microsoft.Storage/storageAccounts/listkeys/action permission

- Provide Placer with the bucket information:

  - Storage account name

  - Storage account key

  - Path to store the data feed (container name and internal path)

For Azure SAS, please provide Placer with the SAS URL.

Email

Placer can grant you access to your data feed via a temporary storage link delivered to email recipients of your choice.

Contact your customer success manager to request this option.

Snowflake

Several of Placer's data feeds are available for access via your Snowflake account. The easiest way to get started is to create an account in Placer's region:

- 'GCP US-CENTRAL1'

Placer can also support export to all AWS, Azure and GCP regions in the US and EU, usually for an additional charge.

Contact your customer success manager to set up a Snowflake view for you.

Delivery folders organization - V3

Relevancy note: This section is relevant for the Premium export only.

- File path will be as follows:

/placer-analytics/SCHEMA/EXPORT_TYPE/TIMESTAMP/ENTITY_TYPE/FILE_TYPE

| Terms | Values | Description |
|---|---|---|
| SCHEMA | bulk-export | The export SCHEMA. |
| EXPORT_TYPE | daily, weekly, monthly, weekly-daily, monthly-daily, monthly-weekly | EXPORT_TYPE is a combination of frequency and aggregation defined for the feed. For example, a weekly export (generated once a week) with a daily level aggregation (each line represents a single day), will be defined as “weekly-daily”. If that export is defined with a weekly level aggregation (each line represents a single week’s aggregated metrics), the definition would be “weekly”. |
| TIMESTAMP | Date in the format "YYYY-MM-DD" | Feed's generation date |
| ENTITY_TYPE | chain, property | Each exported file represents an entity. And that entity has an ENTITY_TYPE. |
| FILE_TYPE | metrics, metadata | Under each ENTITY_TYPE folder the files will be split based on the file types: Metrics files |

- Files are GZip compressed

- If the feed is configured with an explicit list of entities (venues/complexes/billboards), the files are located under the property folder. If it is configured with a list of chains (with or without their sub-entities), the files are located under the chain folder.

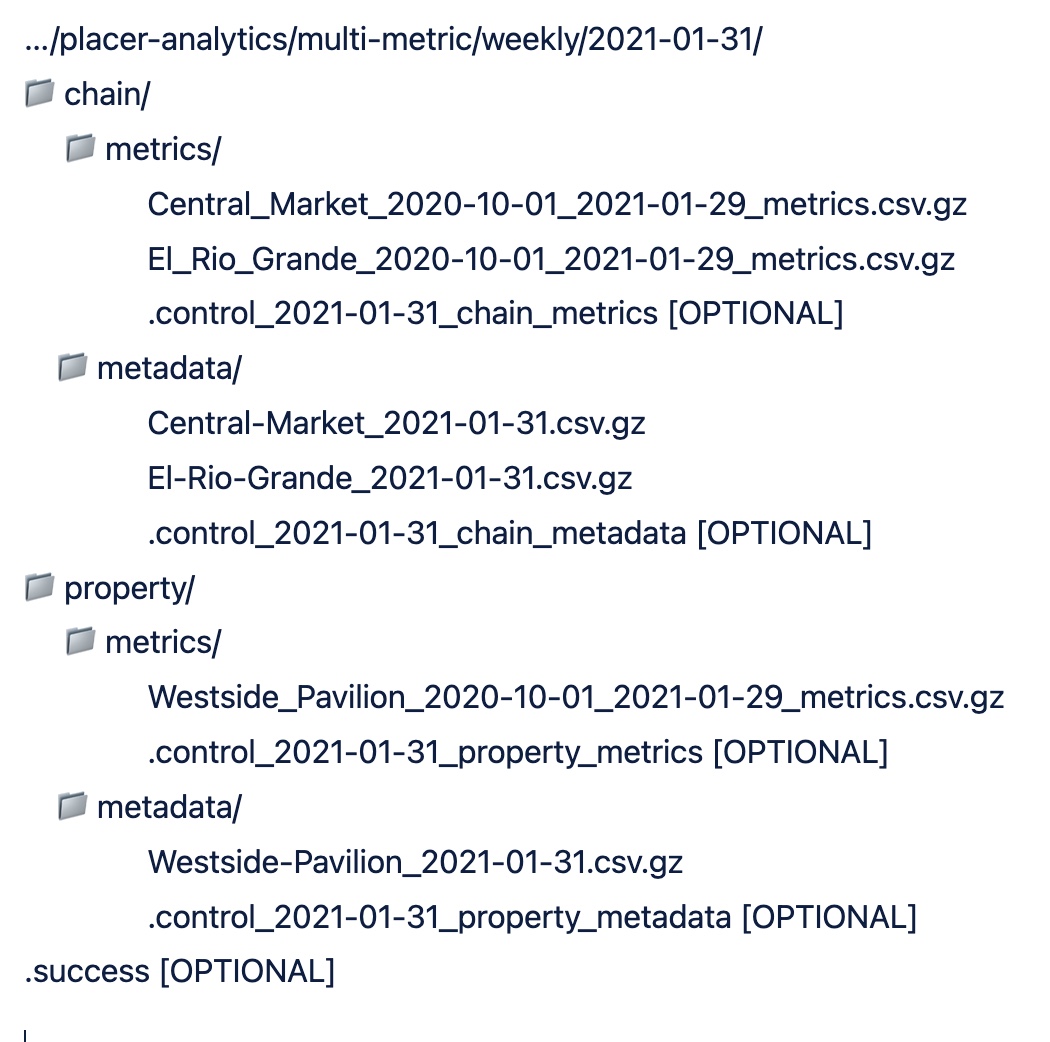

- Folders structure example:
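
As an illustration of the V3 layout, the sketch below assembles a folder path from its components and splits an EXPORT_TYPE into its frequency and aggregation parts. It is a minimal example under stated assumptions: the function and constant names are hypothetical, and the allowed values are taken only from the table in this section.

```python
from datetime import date

# Allowed values, copied from the V3 terms table.
V3_EXPORT_TYPES = {
    "daily", "weekly", "monthly",
    "weekly-daily", "monthly-daily", "monthly-weekly",
}
V3_ENTITY_TYPES = {"chain", "property"}
V3_FILE_TYPES = {"metrics", "metadata"}


def v3_folder_path(export_type: str, generated_on: date,
                   entity_type: str, file_type: str,
                   schema: str = "bulk-export") -> str:
    """Build /placer-analytics/SCHEMA/EXPORT_TYPE/TIMESTAMP/ENTITY_TYPE/FILE_TYPE."""
    if export_type not in V3_EXPORT_TYPES:
        raise ValueError(f"unknown EXPORT_TYPE: {export_type!r}")
    if entity_type not in V3_ENTITY_TYPES:
        raise ValueError(f"unknown ENTITY_TYPE: {entity_type!r}")
    if file_type not in V3_FILE_TYPES:
        raise ValueError(f"unknown FILE_TYPE: {file_type!r}")
    # date.isoformat() yields the documented "YYYY-MM-DD" form.
    stamp = generated_on.isoformat()
    return f"/placer-analytics/{schema}/{export_type}/{stamp}/{entity_type}/{file_type}"


def split_export_type(export_type: str) -> tuple[str, str]:
    """Return (frequency, aggregation); a single word means both are the same."""
    frequency, _, aggregation = export_type.partition("-")
    return frequency, aggregation or frequency


if __name__ == "__main__":
    print(v3_folder_path("weekly-daily", date(2025, 2, 20), "chain", "metrics"))
    print(split_export_type("weekly-daily"))
```

Validating against the documented value sets means a misspelled component raises an error rather than producing a path that does not exist.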

Delivery folders organization - V4

Relevancy note: This section is relevant for the Premium export and Origins feeds.

- File path will be as follows:

/placer-analytics/SCHEMA/EXPORT_TYPE/TIMESTAMP/FILE_TYPE/

| Terms | Values | Description |
|---|---|---|
| SCHEMA | bulk-export, visits-by-origin | The export SCHEMA. |
| EXPORT_TYPE | daily, weekly, monthly, weekly-daily, monthly-daily, monthly-weekly | EXPORT_TYPE is a combination of frequency and aggregation defined for the feed. For example, a weekly export (generated once a week) with a daily level aggregation (each line represents a single day), will be defined as “weekly-daily”. If that export is defined with a weekly level aggregation (each line represents a single week’s aggregated metrics), the definition would be “weekly”. |
| TIMESTAMP | Date in the format "YYYY-MM-DD" or Date and time in the format "YYYY-MM-DD_HHMMSS" | Feed's generation date and time. |
| FILE_TYPE | metrics, metadata, regions_metadata | Under each ENTITY_TYPE folder the files will be split based on the file types: Metrics files |

- Files are GZip compressed

- File partitioning optimization is employed.

- File path examples

- "/placer-analytics/visits-by-origin/weekly/2025-02-20/metrics/"

- "/placer-analytics/bulk-export/daily/2025-02-20_153045/metadata/"

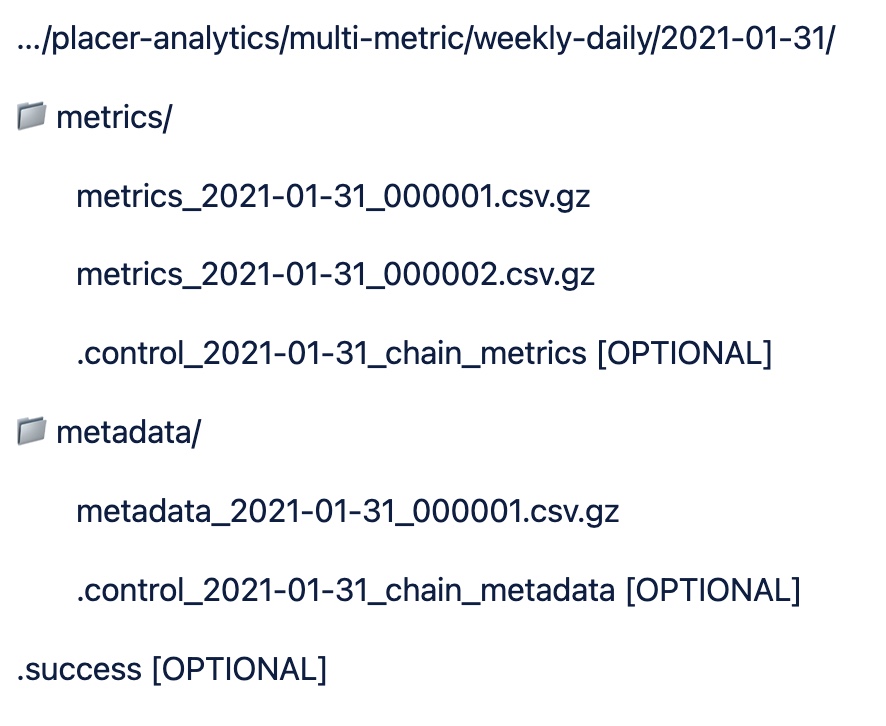

- Folders structure example:
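
When processing V4 deliveries, it can help to split a folder path back into its components and to accept both documented timestamp forms. The following is a minimal sketch; the `V4Path` type and `parse_v4_path` function are hypothetical names, and the parser assumes the path has exactly the five segments shown in the template above.

```python
from datetime import datetime
from typing import NamedTuple


class V4Path(NamedTuple):
    schema: str
    export_type: str
    generated_at: datetime
    file_type: str


def parse_v4_path(path: str) -> V4Path:
    """Parse /placer-analytics/SCHEMA/EXPORT_TYPE/TIMESTAMP/FILE_TYPE/."""
    parts = path.strip("/").split("/")
    if len(parts) != 5 or parts[0] != "placer-analytics":
        raise ValueError(f"not a V4 delivery path: {path!r}")
    _, schema, export_type, stamp, file_type = parts

    # Try the date-and-time form first, then fall back to the date-only form.
    for fmt in ("%Y-%m-%d_%H%M%S", "%Y-%m-%d"):
        try:
            generated_at = datetime.strptime(stamp, fmt)
            break
        except ValueError:
            continue
    else:
        raise ValueError(f"unrecognized timestamp: {stamp!r}")

    return V4Path(schema, export_type, generated_at, file_type)


if __name__ == "__main__":
    print(parse_v4_path("/placer-analytics/visits-by-origin/weekly/2025-02-20/metrics/"))
    print(parse_v4_path("/placer-analytics/bulk-export/daily/2025-02-20_153045/metadata/"))
```

A date-only timestamp is returned as midnight of that day, so callers that need to distinguish the two forms should inspect the original string.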

Delivery Scheduling

Relevancy note: This section is relevant for the Premium export and Origins feed.

The delivery schedule for each feed, by delivery frequency, is as follows:

| Feed | Frequency | Delivery Scheduling |
|---|---|---|
| Premium export | Daily | Each day at around 7 am EST |
| Premium export | Weekly | Every Thursday |
| Premium export | Monthly | On the 6th of each month |
| Origins | Monthly | On the 6th of each month |
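
If you schedule a downstream job around these deliveries, the next expected delivery date can be computed from the schedule above. The sketch below is illustrative only: the function names are hypothetical, it works with calendar dates and ignores the time of day and time zone, and it treats a delivery due today as the next delivery.

```python
from datetime import date, timedelta

THURSDAY = 3  # date.weekday() numbers Monday as 0, so Thursday is 3


def next_weekly_delivery(today: date) -> date:
    """Return the first Thursday on or after the given date."""
    days_ahead = (THURSDAY - today.weekday()) % 7
    return today + timedelta(days=days_ahead)


def next_monthly_delivery(today: date) -> date:
    """Return the first 6th-of-the-month on or after the given date."""
    if today.day <= 6:
        return today.replace(day=6)
    # Past the 6th: roll over to the next month, wrapping December to January.
    if today.month == 12:
        return date(today.year + 1, 1, 6)
    return date(today.year, today.month + 1, 6)


if __name__ == "__main__":
    print(next_weekly_delivery(date.today()))
    print(next_monthly_delivery(date.today()))
```

Because actual arrival times can vary, a job like this is best paired with a completion check rather than relying on the date alone.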

Delivery Completion Indication

Placer offers the following ways to signal that the entire delivery is complete and the files are available for retrieval:

- .success file - This file is the final file being delivered and it is empty; its arrival signals that all deliverables are ready for retrieval. You can find the file's location within the various available file structures above.

- Amazon Simple Notification Service - you can learn more about it here.
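
A consumer can wait for the .success file before reading a delivery. The sketch below checks a list of object keys (for example, the result of listing your bucket) for that marker. It rests on assumptions: the function name and the sample data file name are hypothetical, and the marker is assumed to sit somewhere under the delivery's timestamp folder, so confirm its exact location against the folder structure for your feed.

```python
from typing import Iterable


def delivery_is_complete(keys: Iterable[str], delivery_prefix: str,
                         marker: str = ".success") -> bool:
    """Return True if a marker file exists anywhere under delivery_prefix."""
    prefix = delivery_prefix.rstrip("/") + "/"
    return any(
        key.startswith(prefix) and key.rsplit("/", 1)[-1] == marker
        for key in keys
    )


if __name__ == "__main__":
    # Hypothetical listing; "part-0.csv.gz" is an invented file name.
    listing = [
        "placer-analytics/bulk-export/daily/2025-02-20/metrics/part-0.csv.gz",
        "placer-analytics/bulk-export/daily/2025-02-20/.success",
    ]
    prefix = "placer-analytics/bulk-export/daily/2025-02-20"
    print(delivery_is_complete(listing, prefix))
```

Comparing the final path segment to the marker name, rather than using a plain suffix match, avoids false positives from files whose names merely end in ".success".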
